Published: Aug 22, 2023

Tree of Thoughts is a prompt engineering method that builds on the Chain of Thought concept and combines it with elements of Self-Consistency with CoT and iterative refinement. It formalizes what the informal prompting world has called “giving the model time to think”.

Tree of Thoughts prompts are rooted in the observation that thoughts and ideas are not linear. Instead, they branch out, much like trees: thoughts often stem from a core concept and diverge into various branches of related yet distinct ideas.

The prompts guide the language model along a pre-defined ‘tree’ of interconnected ideas or steps. Instead of being posed one broad question, the model is led through a series of connected, more specific prompts, much like a natural conversation progresses. At each level, the model is asked to score each branch it could take next and decide where to go from there. It then eliminates the weaker branches and continues only along the “good paths”.

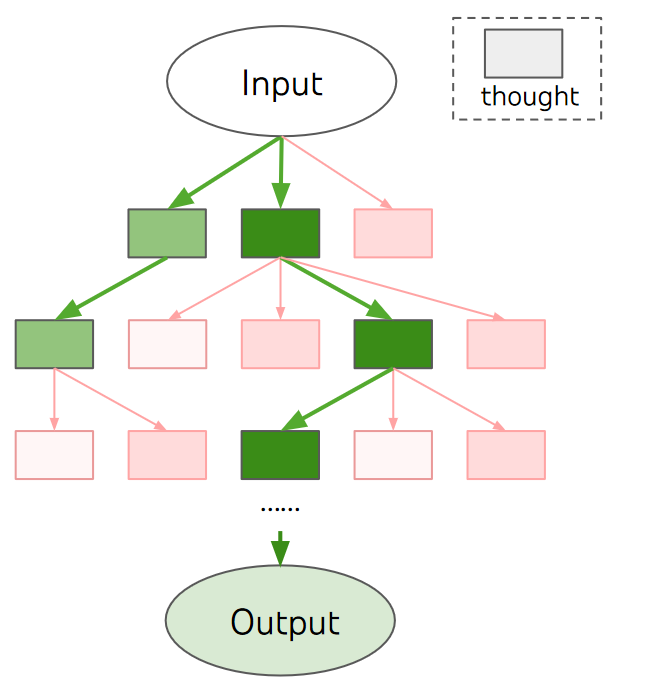

The diagram above, from the paper, illustrates this. Each reddish leaf is a “dead thought”: a branch the language model decided not to pursue further at that level. The green leaves are “good thoughts”, branches that were explored further; the darker the shade, the longer that path was considered and explored in the tree of thoughts for the given prompt.
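The score-and-prune loop described above can be sketched as a breadth-first search that keeps only the best few branches at each level. This is a minimal sketch under stated assumptions: `propose` and `score` are hypothetical stand-ins for two model calls (one that generates candidate next thoughts, one that rates them), and here they are replaced with toy string functions so the search runs without a model. The paper describes both breadth-first and depth-first variants; this shows only the breadth-first one.

```python
from typing import Callable, List


def tot_bfs(
    root: str,
    propose: Callable[[str], List[str]],
    score: Callable[[str], float],
    beam_width: int,
    depth: int,
) -> str:
    """Breadth-first Tree of Thoughts search that prunes weak branches.

    propose(state) -> candidate next states (stand-in for a "generate thoughts" prompt)
    score(state)   -> how promising a state looks (stand-in for an "evaluate" prompt)
    """
    frontier = [root]
    for _ in range(depth):
        # Expand every surviving state into its candidate branches.
        candidates = [child for state in frontier for child in propose(state)]
        if not candidates:
            break  # no branches left: keep the current frontier
        # Rank all branches at this level; only the top `beam_width` survive,
        # the rest become "dead thoughts".
        candidates.sort(key=score, reverse=True)
        frontier = candidates[:beam_width]
    # The frontier is sorted best-first, so its first entry is the best state.
    return frontier[0]


# Toy stand-ins (hypothetical): build the string "cab" one letter at a time.
TARGET = "cab"


def propose(state: str) -> List[str]:
    return [state + letter for letter in "abc"]


def score(state: str) -> float:
    # Count how many positions already match the target.
    return sum(a == b for a, b in zip(state, TARGET))


if __name__ == "__main__":
    print(tot_bfs("", propose, score, beam_width=2, depth=3))  # cab
```

With `beam_width=2`, only two candidates survive each level, so the last level scores 6 strings instead of all 27 possible ones. That saving is the point of pruning.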

For example, suppose you want to find the best way to improve productivity. Instead of asking the model a single question like “How can I improve productivity?”, you could use the Tree of Thoughts approach and break the question down into smaller, connected prompts, enabling the model to “logically guide itself”:

- “List some factors that affect productivity.”

- “For each factor, suggest ways to improve it.”

- “Evaluate the effectiveness of each suggested improvement.”

- “Rank the improvements based on their potential impact on productivity.”
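The productivity prompts above can be run as a chain in which each step sees the earlier answers. A minimal sketch, assuming a hypothetical `ask_model(prompt) -> str` function that wraps whatever LLM client you use; a recording stub stands in for it here. On its own, this linear chain covers only the step-by-step part. The branching and pruning described earlier would come from sampling several answers per step and scoring them.

```python
from typing import Callable, List

PRODUCTIVITY_STEPS = [
    "List some factors that affect productivity.",
    "For each factor, suggest ways to improve it.",
    "Evaluate the effectiveness of each suggested improvement.",
    "Rank the improvements based on their potential impact on productivity.",
]


def run_prompt_chain(steps: List[str], ask_model: Callable[[str], str]) -> List[str]:
    """Send each step to the model, carrying all earlier steps and answers as context."""
    transcript: List[str] = []
    answers: List[str] = []
    for step in steps:
        # The prompt is the conversation so far followed by the new instruction.
        prompt = "\n\n".join(transcript + [step])
        answer = ask_model(prompt)
        transcript.extend([step, answer])
        answers.append(answer)
    return answers


# Hypothetical stub standing in for a real model call: it records each prompt.
seen_prompts: List[str] = []


def fake_model(prompt: str) -> str:
    seen_prompts.append(prompt)
    return f"<answer {len(seen_prompts)}>"


if __name__ == "__main__":
    print(run_prompt_chain(PRODUCTIVITY_STEPS, fake_model))
    # ['<answer 1>', '<answer 2>', '<answer 3>', '<answer 4>']
```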

Examples of Tree of Thoughts Prompting

Here are three more detailed examples of Tree of Thoughts prompting to help you better understand the concept:

Example 1: Planning a vacation

Instead of asking the model, “How should I plan my vacation?”, you could break the question down into smaller, connected prompts:

- “List some popular vacation destinations.”

- “For each destination, describe the main attractions and activities.”

- “Compare the costs and travel times for each destination.”

- “Based on the information, recommend the best destination for a 7-day vacation.”
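In the vacation example, the first prompt produces a list and the second one branches on it: each destination becomes its own sub-prompt. A minimal sketch of that fan-out, assuming the model answers step 1 as a bulleted or numbered list. `ask_model` is a hypothetical stand-in for a real model call (a lambda stub is used here), and the destination names in the stub reply are made-up placeholders.

```python
import re
from typing import Callable, Dict, List

# Matches "- item", "* item", "1. item" or "1) item" and captures the item text.
LIST_ITEM = re.compile(r"^\s*(?:[-*]|\d+[.)])\s+(.+?)\s*$")


def split_into_branches(list_answer: str) -> List[str]:
    """Turn a model's bulleted or numbered list into one branch per item."""
    branches = []
    for line in list_answer.splitlines():
        match = LIST_ITEM.match(line)
        if match:
            branches.append(match.group(1))
    return branches


def explore_branches(
    branches: List[str], template: str, ask_model: Callable[[str], str]
) -> Dict[str, str]:
    """Ask the same follow-up question once per branch, keyed by branch."""
    return {branch: ask_model(template.format(item=branch)) for branch in branches}


if __name__ == "__main__":
    # Made-up step-1 answer; the last line is prose, not a list item.
    step1 = "1. Lisbon\n2. Kyoto\n- Cusco\nAll three are popular choices."
    branches = split_into_branches(step1)
    print(branches)  # ['Lisbon', 'Kyoto', 'Cusco']
    details = explore_branches(
        branches,
        "For {item}, describe the main attractions and activities.",
        lambda prompt: f"[reply to: {prompt}]",  # stub instead of a real model
    )
    print(details["Kyoto"])
```

Each entry in `details` is one branch that the later steps (comparing costs and travel times, then recommending) can score or prune.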

Example 2: Choosing a career path

Instead of asking the model, “What career should I choose?”, you could use the Tree of Thoughts approach with the following prompts:

- “List some industries that align with my interests and skills.”

- “For each industry, describe the job opportunities and potential career paths.”

- “Evaluate the job market and future growth prospects for each industry.”

- “Based on the analysis, suggest a suitable career path for me.”
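The evaluation step in the career example is where branches get scored. One way to make that score less noisy is to ask the model for a coarse verdict several times and add up the results, loosely in the spirit of the value prompts in the ToT paper. In this sketch, the verdict labels, their weights, the prompt wording and the `ask_model` stub are all assumptions, and “industry A/B/C” are placeholders.

```python
from typing import Callable, Dict, List

# Illustrative weights for each verdict (an assumption; tune for your task).
VERDICT_WEIGHTS: Dict[str, float] = {"promising": 1.0, "uncertain": 0.3, "dead end": 0.0}


def value_of(option: str, ask_model: Callable[[str], str], n_samples: int = 3) -> float:
    """Sum the weights of several sampled verdicts for one option."""
    prompt = (
        f"Evaluate the job market and growth prospects for: {option}\n"
        "Reply with exactly one of: promising, uncertain, dead end."
    )
    total = 0.0
    for _ in range(n_samples):
        verdict = ask_model(prompt).strip().strip(".").lower()
        total += VERDICT_WEIGHTS.get(verdict, 0.0)  # unrecognized replies add nothing
    return total


def rank_options(
    options: List[str], ask_model: Callable[[str], str], n_samples: int = 3
) -> List[str]:
    """Return the options ordered from most to least promising."""
    values = {option: value_of(option, ask_model, n_samples) for option in options}
    return sorted(options, key=lambda option: values[option], reverse=True)


def fake_model(prompt: str) -> str:
    # Hypothetical stub: a real model's verdicts would vary between samples.
    if "industry B" in prompt:
        return "Promising."
    if "industry A" in prompt:
        return "uncertain"
    return "dead end"


if __name__ == "__main__":
    print(rank_options(["industry A", "industry B", "industry C"], fake_model))
    # ['industry B', 'industry A', 'industry C']
```

Sampling several verdicts and summing them smooths out a single unlucky answer; with a deterministic stub, as here, every sample agrees.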

Example 3: Solving a math problem

Instead of asking the model to solve a math problem directly, you could guide the model through the problem-solving process with connected prompts:

- “Identify the type of math problem and the required steps to solve it.”

- “Explain the first step and provide the intermediate result.”

- “Continue with the next step and provide the updated result.”

- “Repeat the process until the problem is solved and provide the final answer.”
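The math steps above follow a single line of reasoning. A tree search instead tries several possible next steps and backtracks when a branch dies out. The sketch below uses the Game of 24 (combine four numbers with +, -, * and / to reach 24), one of the tasks in the ToT paper, but it swaps the language model for plain enumeration. Every arithmetic step on two remaining numbers is a candidate thought, and ending on a number other than 24 is a dead end. It is a depth-first illustration of the search shape, not the paper's implementation.

```python
from fractions import Fraction
from itertools import combinations
from typing import List, Optional, Sequence


def solve_24(numbers: Sequence[int], target: int = 24) -> Optional[List[str]]:
    """Depth-first search where each 'thought' combines two remaining numbers."""

    def dfs(state: List[Fraction], steps: List[str]) -> Optional[List[str]]:
        if len(state) == 1:
            # Leaf: either a good thought (hit the target) or a dead one.
            return steps if state[0] == target else None
        for i, j in combinations(range(len(state)), 2):
            a, b = state[i], state[j]
            rest = [x for k, x in enumerate(state) if k not in (i, j)]
            # Every arithmetic step on the pair (a, b) is one branch.
            branches = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                        (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
            if b != 0:
                branches.append((a / b, f"{a} / {b}"))
            if a != 0:
                branches.append((b / a, f"{b} / {a}"))
            for value, expr in branches:
                found = dfs(rest + [value], steps + [f"{expr} = {value}"])
                if found is not None:
                    return found  # stop at the first complete solution
        return None  # every branch below this state died, so backtrack

    # Fractions keep division exact (e.g. 8 / 3 stays 8/3, not 2.666...).
    return dfs([Fraction(n) for n in numbers], [])


if __name__ == "__main__":
    for step in solve_24([4, 9, 10, 13]) or []:
        print(step)
```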

By using the Tree of Thoughts approach in these examples, you can guide the language model through a more structured and deliberate reasoning process, allowing it to explore different aspects of the problem and generate more comprehensive solutions.

Practical Application of ToT prompts

Tree of Thoughts prompting can be applied to a variety of practical use cases. For example, when planning a neighborhood grocery store, the model can be guided through a series of prompts covering key considerations, layout design, pricing strategy, and marketing initiatives.

Shortcomings of Tree of Thoughts

While the Tree of Thoughts prompting strategy has yielded promising results and enabled models to solve more complex reasoning problems, it still has limitations. Tree of Thoughts requires more planning than direct prompting and may be overkill for simpler queries, in which case few-shot or one-shot prompting may be a better fit. Moreover, the model’s responses are still shaped by its training and inherent biases, which this strategy does not remove.
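To make the extra cost concrete, here is a rough count of model calls for a breadth-first ToT run. The assumptions are illustrative, not figures from the paper: the search runs for T steps and keeps b states per step. Each kept state is expanded with k separate generation calls (with k ≥ b), and each candidate is scored with one evaluation call. Step 1 starts from a single root, so it has k candidates. Every later step has b·k candidates, and each candidate costs two calls.

```latex
N_{\text{calls}}
  = \underbrace{2k}_{\text{step } 1}
  + \underbrace{2bk\,(T-1)}_{\text{steps } 2,\dots,T}
  = 2k\bigl(1 + b\,(T-1)\bigr)
```

With k = 5, b = 5 and T = 3, this gives 2 · 5 · (1 + 5 · 2) = 110 calls, compared with a single call for a direct prompt.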

Criticism of the paper

I, along with others, have some concern about the amount of hand-holding in the paper’s examples. The extensive guidance given to the model in the “Tree of Thoughts” paper raises questions about its independent problem-solving capabilities. Comparing its performance with earlier approaches such as Chain of Thought, under similar levels of guidance, would be insightful. It would help show how much of the problem-solving is genuinely due to the model’s reasoning capabilities and how much is driven by the specific instructions or frameworks the researchers provided. Such a comparison could also reveal the inherent strengths and limitations of each approach, giving a clearer perspective on how AI problem-solving techniques are advancing.

That said, the Tree of Thoughts technique has since been adopted in many follow-up works and has consistently produced good results.

References

- Shunyu Yao et al., Tree of Thoughts: Deliberate Problem Solving with Large Language Models (2023)

- Dave Hulbert, Tree of Thought Prompting

- Yannic Kilcher, Tree of Thoughts: Deliberate Problem Solving with Large Language Models (Full Paper Review)